Research and Evaluation

Research and evaluation share similarities in design choices, ethical considerations and the tools used. Both are subject to particular scrutiny when they involve working with vulnerable people. Research and evaluation are nevertheless distinct fields, and a separate section is devoted to each.

Evaluation is always applied research, undertaken to understand the impact, and perhaps the process, of an intervention. The evaluator presents and interprets data, offers judgements and often makes recommendations. Evaluation as I practise it is geared towards informing process development, including the more cutting-edge developmental evaluation used in innovative and more ambiguous contexts.

Research may be blue-skies or empirical, and it often involves analysis of literature and other media. Research can also be applied, intended to inform the development of a social program or policy. Applied research and evaluation are the backbone of good social interventions, social and micro enterprises, and organizational development. My preferred style of working builds a relationship between theory and practice: a mutual back and forth between the academy and its knowledge building, and what is developed and discovered in the field.

It is important to devote time and care to the design and execution of empirical research and evaluations in the social justice, social welfare and human-animal interaction arenas, to maximize the well-being of human and animal participants.

Research

Research methodologies and methods can be harnessed in a variety of ways to support policy, program, organizational and enterprise development, from blue-skies thinking through to applied research activities. Research might involve a literature review or a desk-based review of existing practices and policy reports, running consultations or focus groups, conducting quick doorstep interviews to gather opinions, running a survey, or carrying out more in-depth interviews. It could also involve working with groups using a range of participatory or more creative data-generating strategies, such as site walks or visual tools like photographs. Over my career I have become skilled in a variety of research activities and have used multiple approaches.

Good research informs the development of programs and organizations, helping them to shape their direction and their offer on an informed basis. Research does not always have to be undertaken by an external contractor: working with organizations, I have developed Action Research teams that both explore an issue internally and build the organization's capacity to do so.

Evaluation

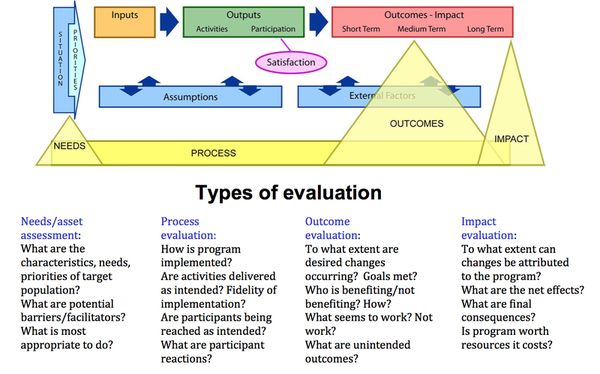

Evaluation uses research tools and approaches, such as interviews, surveys and participatory research tools. However, the purpose of evaluation research is to understand the impact of an intervention or activity on the constituency designated as its target group. It is likely to uncover unintended outcomes, which may be benefits or dis-benefits. Evaluations also look at longer-term impact and at wider groups of stakeholders. There are various types of evaluation:

- Formative evaluations report on the processes of an intervention (its design, delivery and management) as well as its outputs and outcomes.

- Summative evaluations assess the outcomes and outputs of an intervention or program.

- Developmental evaluations work in lock-step with consultancy and coaching, as their findings are used in real time to assist and shape the development of an initiative or intervention.

Formative and summative evaluations differ significantly from the monitoring and auditing of interventions or programs. Evaluations often include discussion of finance and other resources, and of the return on investment.

Evaluations collect and interpret data and offer judgements about effectiveness against intentions. Evaluations are not for the faint-hearted or the muddle-minded. Ideally, they should be designed into an intervention at its planning stage: the evaluation strategy generates crisper thinking about what is intended and a clearer articulation of the intervention's various elements.

Evaluation is a useful process with the potential to inform the development of an intervention and generate discussion about organizational development. Evaluations strengthen strategic and management decision-making.

Evaluations of human-animal interaction programs should be designed to reflect animal well-being and welfare, to question the role of the animal, and to take account of how data about the animal's involvement is captured.

Theory of Change and Logic Models

There is much to be gained from thinking through the theory of change that underpins an intervention and combining this with the use of logic models, of which there are several varieties.

Introducing Logic Models

A short paper describing various types of logic models.

Logic_Models.pdf

Other places to look

www.SquareCubeConsulting.com for evaluation and consultancy in social impact

www.BlueDogConsulting.com for research and evaluation in AAI and HAI

Integrating Evaluative Thinking into Program Design